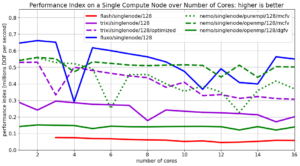

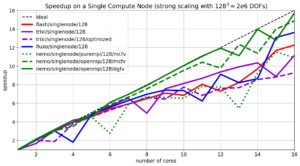

We compare the runtime performance of four magnetohydrodynamics (MHD) codes on a single compute node of the in-house high-performance cluster ODIN. A compute node on ODIN has 16 cores. We run the ‘3D Alfven wave’ test case up to a fixed simulation time and measure the elapsed wall-clock time of each code, excluding initialization and input/output operations. From run to run, the number of cores is successively increased, which gives insight into the scaling behavior (speedup with increasing number of cores) on a single compute node. Furthermore, we plot the performance index (PID) over the number of cores. The PID is a measure of throughput, i.e. how many millions of data points (degrees of freedom, DOF) per second are processed by each code.
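The two quantities described above can be computed from the measured wall-clock times roughly as follows. This is only a minimal sketch and not the script used to produce the plots: the function names, the choice of the smallest core count as the speedup baseline, and the assumption that the number of processed data points equals DOF times the number of time steps are all illustrative.

```python
def speedup(wall_times):
    """Return {cores: speedup} relative to the run with the fewest cores.

    wall_times maps a core count to the measured wall-clock time in seconds
    (initialization and I/O already excluded). Using the smallest core count
    as the baseline is an assumption of this sketch.
    """
    base_time = wall_times[min(wall_times)]
    return {cores: base_time / t for cores, t in sorted(wall_times.items())}


def throughput_mdof_per_s(n_dof, n_steps, wall_time_s):
    """Millions of DOF updates processed per second of wall-clock time.

    Assumes (hypothetically) that each time step touches every DOF once,
    so the amount of processed data is n_dof * n_steps.
    """
    return n_dof * n_steps / wall_time_s / 1.0e6


if __name__ == "__main__":
    # Made-up timings for illustration only, not measured results.
    example_times = {1: 100.0, 2: 50.0, 4: 30.0}
    for cores, s in speedup(example_times).items():
        print(f"{cores:2d} cores: speedup {s:.2f}")
    print(throughput_mdof_per_s(n_dof=1_000_000, n_steps=10, wall_time_s=5.0))
```

The timings in the usage part are placeholders; in practice one would fill the dictionary with the wall-clock times reported by each code for each core count.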

The four contestants are:

- Flash [1] with Paramesh 4.0 and the five-wave Bouchut finite-volume solver, written in Fortran

- Fluxo [2], an MHD Discontinuous Galerkin Spectral Element code written in Fortran

- Trixi [3], an MHD Discontinuous Galerkin Spectral Element code written in Julia

- Nemo, an in-house prototype code written in Fortran that provides a 2nd-order monotonized-central MHD finite-volume scheme (MCFV) and a hybrid MHD Discontinuous Galerkin Spectral Element / Finite Volume scheme (DGFV)

Trixi uses a thread-based parallelization approach while Flash, Fluxo and Nemo use the standard MPI method. Furthermore, Nemo also provides OpenMP-based parallelization.

[1] https://flash.uchicago.edu/site/

[2] https://github.com/project-fluxo/fluxo

[3] https://github.com/trixi-framework/Trixi.jl