Earth’s climate is changing due to human activity. Predicting future climate change is therefore essential if we want to reduce human interference with the climate system (climate change mitigation) and our vulnerability to the harmful effects of climate change (climate change adaptation).

The time-dependent local temperature on the globe can be modeled with a simple two-dimensional energy balance model [1,2]:

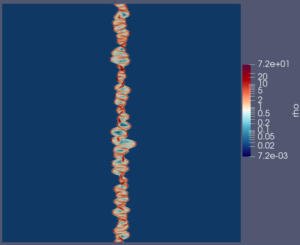

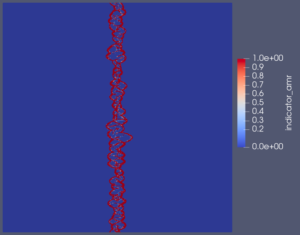

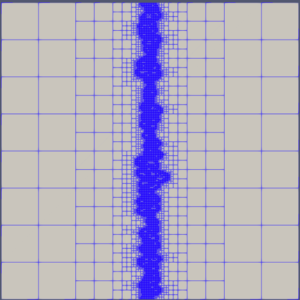

$$ C(\vec{x}) \frac{\partial T}{\partial t} + A + BT = \nabla \cdot \left( D(\vec{x}) \nabla T \right) + Q S(\vec{x},t) (1-a(\vec{x})), $$

where $T$ is the local temperature and $C(\vec{x})$ is the local heat capacity for a column of air over land, water or ice. The term $A+BT$ models the outgoing long-wave radiation: $A$ depends on the amount of greenhouse gases in the atmosphere and $B$ models several feedback effects. $D(\vec{x})$ is a latitude-dependent diffusion coefficient. The last term is the heat input into the system: $Q$ is the solar radiation at Earth’s position in the solar system; $S(\vec{x},t)$ is the seasonal and latitudinal distribution of insolation, which depends on orbital parameters such as the eccentricity of Earth’s orbit and the precession and tilt of Earth’s rotation axis; and $a(\vec{x})$ is the local albedo, which measures the reflection of solar radiation and depends on the surface cover.
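
As a rough check on the roles of $A$, $B$, $Q$ and $a$, the equation can be reduced to a global-mean balance. This is a sketch under stated assumptions, not part of the model in [1,2]: the temperature field is taken to be a periodic steady state averaged over one year, $A$ and $B$ are treated as constants, $S$ is assumed to be normalized so that its global annual mean is 1, and $a_p$ is a hypothetical effective planetary albedo defined by $\langle S(1-a) \rangle = 1 - a_p$, where $\langle \cdot \rangle$ denotes the global annual mean. Under these assumptions the time-derivative term averages to zero and the diffusion term integrates to zero over the closed surface of the sphere, which leaves

$$ A + B \langle T \rangle = Q (1 - a_p) \quad \Longrightarrow \quad \langle T \rangle = \frac{Q (1 - a_p) - A}{B}. $$

In this reduced form, a change $\Delta A$ in the long-wave offset shifts the mean temperature by $-\Delta A / B$, so $B$ sets how strongly the mean temperature responds to a change in $A$.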

We developed Julia code that implements this energy balance model (EBM) on the surface of the sphere and used it to describe the mean temperatures on the globe, assuming that the atmospheric CO$_2$ concentration stays constant at its 1950 level. The results are presented in the animation.
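
To give a feel for how such a model is stepped forward in time, here is a minimal Python sketch of a much simpler, zero-dimensional version of the balance, $C \, dT/dt = Q(1-a) - (A + BT)$, with no diffusion and no seasonal cycle. It is not the Julia implementation described above, and every parameter value in it (`A`, `B`, `Q`, `ALBEDO`, `C`, with temperature in degrees Celsius) is an illustrative assumption rather than a value taken from the model.

```python
"""Zero-dimensional (global-mean) energy balance sketch.

All parameter values are illustrative assumptions; they are not taken
from the text or from the authors' Julia code.
"""

SECONDS_PER_DAY = 86_400.0

# Assumed, illustrative parameter values (temperature in degrees Celsius).
A = 210.0      # W m^-2, constant part of the outgoing long-wave radiation
B = 2.0        # W m^-2 per deg C, linear feedback coefficient
Q = 340.0      # W m^-2, global-mean incoming solar radiation
ALBEDO = 0.3   # dimensionless global-mean albedo
C = 2.0e8      # J m^-2 per deg C, roughly a 50 m ocean mixed layer


def equilibrium_temperature(A, B, Q, albedo):
    """Temperature at which absorbed sunlight balances A + B*T."""
    return (Q * (1.0 - albedo) - A) / B


def step(T, dt, C, A, B, Q, albedo):
    """One forward-Euler step of C dT/dt = Q (1 - albedo) - (A + B T)."""
    net_flux = Q * (1.0 - albedo) - (A + B * T)
    return T + dt * net_flux / C


def integrate(T0, n_days, C=C, A=A, B=B, Q=Q, albedo=ALBEDO):
    """Integrate with a one-day time step and return the final temperature."""
    T = T0
    for _ in range(n_days):
        T = step(T, SECONDS_PER_DAY, C, A, B, Q, albedo)
    return T


if __name__ == "__main__":
    T_eq = equilibrium_temperature(A, B, Q, ALBEDO)
    T_end = integrate(T0=0.0, n_days=50 * 365)
    print(f"equilibrium: {T_eq:.2f} deg C, after 50 years: {T_end:.2f} deg C")
```

With these assumed values the relaxation time $C/B$ is about three years, so a 50-year integration ends very close to the equilibrium temperature. The one-day step keeps $B \, \Delta t / C$ far below the forward-Euler stability limit of 2. The full two-dimensional model adds the diffusion term and the space- and time-dependent $S$, $a$ and $C$, which this sketch deliberately leaves out.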

[1] North, G.R.; Mengel, J.G.; Short, D.A. (1983). Simple Energy Balance Model Resolving the Seasons and the Continents: Application to the Astronomical Theory of the Ice Ages. Journal of Geophysical Research.

[2] Zhuang, K.; North, G.R.; Stevens, M.J. (2017). A NetCDF version of the two-dimensional energy balance model based on the full multigrid algorithm. SoftwareX.